Enumerate using Google

Using Google or another search engine, we may be able to gather valuable information. We can search for:

- Config files

- SQL files

- Usernames, private keys, even passwords

- Error messages

- Any other technical messages

I mostly use the following queries:

# Find pages

site:site.com

# Find subdomains (exclude www)

site:site.com -site:www.site.com

# Find files by type: php/jsp/aspx/asp/cfm/sql

site:site.com filetype:php

# Find pages whose title contains our keywords

site:site.com intitle:"admin login"

# Find directory listings that expose a specific file

site:site.com intitle:"index of" "backup.php"

# Find files containing passwords

intitle:"index of" ftp passwords

# Find pages whose URL contains our keywords

site:site.com inurl:?id=

# Find admin or password-reset pages, excluding results that mention GitHub

site:site.com inurl:admin/reset.php -github
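When the same queries are run against several targets, it can help to generate them instead of typing them by hand. The following is a minimal sketch that only builds the query strings; the function name `build_dorks` and its default keyword lists are assumptions made for illustration, not part of any tool.

```python
def build_dorks(domain, filetypes=("php", "sql"), title_keywords=("admin login",)):
    """Return a list of Google dork strings for one domain."""
    dorks = [
        f"site:{domain}",                     # all indexed pages
        f"site:{domain} -site:www.{domain}",  # subdomains other than www
    ]
    # One query per file type of interest
    dorks += [f"site:{domain} filetype:{ext}" for ext in filetypes]
    # One query per title phrase, quoted so it is matched as a phrase
    dorks += [f'site:{domain} intitle:"{kw}"' for kw in title_keywords]
    # Pages with an id parameter in the URL
    dorks.append(f"site:{domain} inurl:?id=")
    return dorks


if __name__ == "__main__":
    for dork in build_dorks("site.com"):
        print(dork)
```

The output can be pasted into the search box one query at a time.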

For more Google dorks, see the Google Hacking Database.

Gather Info from Social Sites

I search for employee details, technical posts, and other useful information.

What can we do with this information?

- Get an idea about the company

- Social engineering

- Generate username/password candidates

If we find an employee's name, we can search for it on Google or in people directories to learn more about that person.

Example queries:

- LinkedIn

site:linkedin.com intitle:"Employee Name"

- Twitter

site:twitter.com intitle:"Employee Name"

- Facebook

site:facebook.com intitle:"Employee Name"

- Google

"Employee Name" "Company_name"

- Get the employee list of the company from LinkedIn
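Once employee names are collected, the "username generate" step can be sketched as below. This is only an illustration: the function name `username_candidates`, the sample name, and the six formats are assumptions, and the real naming convention has to be confirmed from something observed, such as an email address found during the search.

```python
def username_candidates(full_name):
    """Return common corporate username formats for a 'First Last' name."""
    parts = full_name.lower().split()
    if len(parts) < 2:
        return parts  # a single word gives nothing to combine
    first, last = parts[0], parts[-1]
    formats = [
        f"{first}.{last}",    # jane.doe
        f"{first}{last}",     # janedoe
        f"{first[0]}{last}",  # jdoe
        f"{first}{last[0]}",  # janed
        f"{last}.{first}",    # doe.jane
        f"{first}_{last}",    # jane_doe
    ]
    # Preserve order while dropping duplicates
    return list(dict.fromkeys(formats))


if __name__ == "__main__":
    # "Jane Doe" is a made-up example name
    print(username_candidates("Jane Doe"))
```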

Banner Grabbing

Banner grabbing reveals which software and version a service is running, which helps us find existing vulnerabilities.

WhatWeb

whatweb domain.com

Nmap

nmap -v -p80,443 -sV domain.com

Netcat

nc -vvv domain.com 80

HEAD / HTTP/1.1
Host: domain.com

Send a malformed request; the resulting error response often reveals the server software:

nc -vvv domain.com 80

GET / BADBOY ISHERE/1.1
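The raw response that comes back from netcat can be reduced to the few headers that matter for fingerprinting. The sketch below parses a saved response offline; `parse_banner` is a hypothetical helper and `SAMPLE` is a made-up response used only for illustration.

```python
def parse_banner(raw_response):
    """Extract the status line and fingerprinting headers from a raw HTTP response."""
    # Headers end at the first blank line
    head = raw_response.split("\r\n\r\n", 1)[0]
    lines = head.split("\r\n")
    info = {"status": lines[0]}
    interesting = {"server", "x-powered-by", "x-aspnet-version"}
    for line in lines[1:]:
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() in interesting:
            info[name.strip().lower()] = value.strip()
    return info


# Made-up response for illustration only
SAMPLE = (
    "HTTP/1.1 200 OK\r\n"
    "Server: Apache/2.4.41 (Ubuntu)\r\n"
    "X-Powered-By: PHP/7.4.3\r\n"
    "Content-Type: text/html\r\n"
    "\r\n"
)

if __name__ == "__main__":
    print(parse_banner(SAMPLE))
```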

Explore Target site

DNS Enumeration

Retrieve common information:

# Check whether robots.txt exists

curl -O -Ss http://www.domain.com/robots.txt

# Get IP address

nslookup domain.com

# Get IP, NS, MX, etc. (many servers now refuse ANY queries)

nslookup -querytype=ANY domain.com

# Same lookups using host

host domain.com

host -t ns domain.com

host -t mx domain.com

# Zone transfer (request the zone itself, not a single host name)

host -l domain.com ns1.domain.com

Reverse Lookup with Bash

for iplist in $(seq 190 255); do host x.x.x.$iplist; done | grep -v "not found"
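The same reverse lookup loop can be written in Python when the results need further processing. This is a rough equivalent under stated assumptions: the helper names are hypothetical, and the `192.0.2` prefix in the usage comment is a documentation range standing in for the real `x.x.x`.

```python
import socket


def candidate_ips(prefix, start=190, end=255):
    """Build addresses prefix.start .. prefix.end (inclusive), like `seq 190 255`."""
    return [f"{prefix}.{last}" for last in range(start, end + 1)]


def reverse_lookup(ip):
    """Return the PTR hostname for ip, or None when no record is found."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:  # covers socket.herror and socket.gaierror
        return None


# Usage (performs live DNS queries):
#   for ip in candidate_ips("192.0.2"):
#       name = reverse_lookup(ip)
#       if name:
#           print(ip, name)
```

Skipping the `None` results plays the same role as `grep -v "not found"` in the shell version.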

DNS Enumeration Tools

Note: Every newly discovered virtual host is important. Even if the main domain is not vulnerable, another virtual host may be, and compromising it could allow us to move to a different virtual host.

#Zone Transfer and Brute force subdomain

dnsenum redtm.com

# Standard enumeration with a zone transfer attempt

dnsrecon -a -d redtm.com

# Test for zone transfer and brute-force DNS

fierce --domain redtm.com

# Search for virtual hosts, brute-force DNS, and also query Google

theHarvester -d redtm.com -v -c -b google


Enumerate Applications

Scan ports

nmap -v -Pn -p- -sV domain.com

Manually connect to every port for banner grabbing

nc -vvv target.com 80

If any non-standard HTTP port is open, explore it:

www.target.com:8080

See how the URL is structured. For example:

#If we have this url

www.target.com/userLogin

#Then Try

www.target.com/adminLogin

Check the digital certificate manually for information such as email addresses, or use sslyze:

sslyze redtm.com

Check other data on the site:

- HTTP headers - We may get valuable information such as the framework version.

- Review HTML source code - Check the comments and the source code structure; they may reveal what is being used or even other sensitive info.

- Cookies - The cookie structure may tell us what is being used. For example, PHPSESSID clearly indicates PHP is there!

- Known files and directories - How about trying some known files or directories? /wp-admin tells us it is WordPress.

- Error messages - These may reveal internal paths, usernames, or other sensitive info. Try to browse something like /config.php or config.php?id[]=449
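The cookie check in the list above can be sketched as a small lookup table. PHPSESSID, JSESSIONID and ASP.NET_SessionId are the default session cookie names for PHP, Java servlet containers and ASP.NET; the helper name `fingerprint_cookies` is an assumption, and a site may rename its cookies, so treat a match as a hint rather than proof.

```python
COOKIE_HINTS = {
    "PHPSESSID": "PHP",
    "JSESSIONID": "Java (servlet container)",
    "ASP.NET_SessionId": "ASP.NET",
}


def fingerprint_cookies(set_cookie_headers):
    """Map Set-Cookie header values to the technologies their names suggest."""
    found = set()
    for header in set_cookie_headers:
        # The cookie name is everything before the first "="
        cookie_name = header.split("=", 1)[0].strip()
        for hint, tech in COOKIE_HINTS.items():
            if cookie_name.lower() == hint.lower():
                found.add(tech)
    return sorted(found)


if __name__ == "__main__":
    print(fingerprint_cookies(["PHPSESSID=abc123; path=/"]))
```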

Enumerate Files and Username

Crawling and file fuzzing are among the most important parts of web enumeration. What should we look for?

- Find all GET/POST method parameters

- Brute-force directories and files

Nikto

Nikto is a popular web server scanner. It searches for dangerous files and some common vulnerabilities.

nikto -h redtm.com

Burp Suite

Crawling

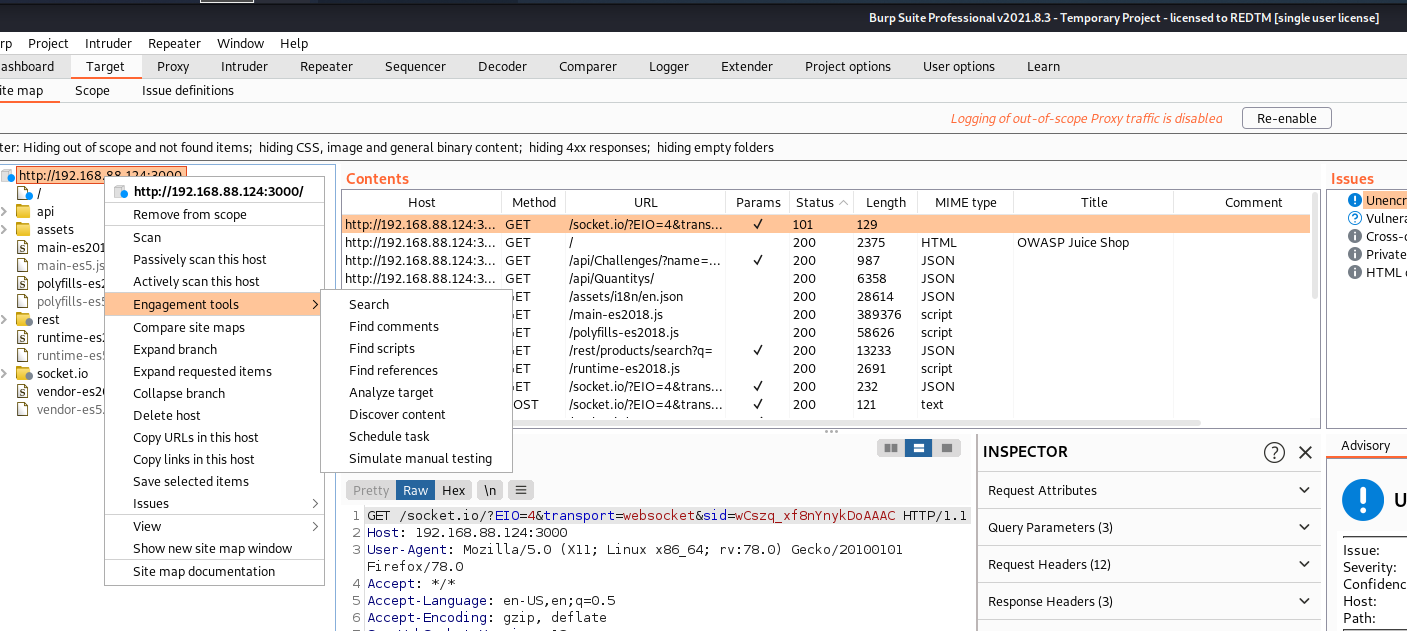

Crawl Using Burp Suite Pro

- Intercept the target

- Right click on the target address.

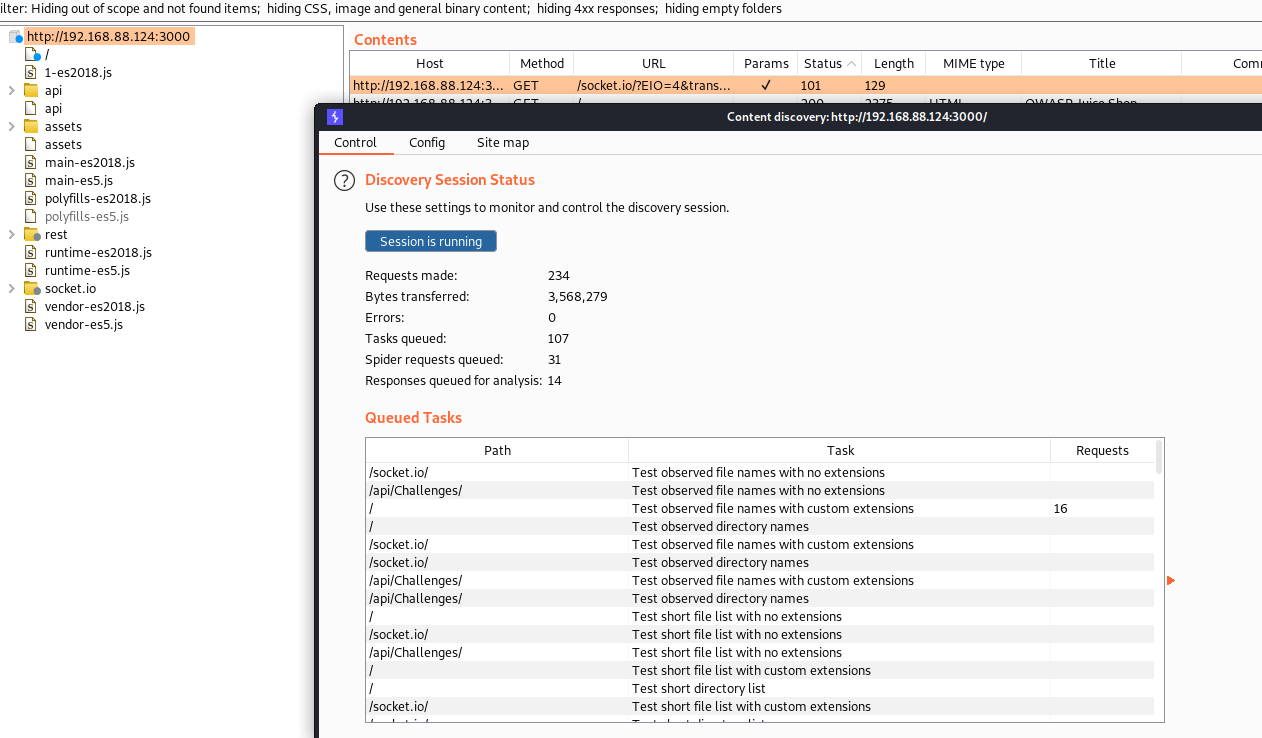

- Engagement Tools>Discover Content

- Click on “Session is not running”

Now what?

- Check all interesting links after crawling and find URL parameters

- Manually visit the site and submit forms to capture the parameters

Directory Brute Forcing

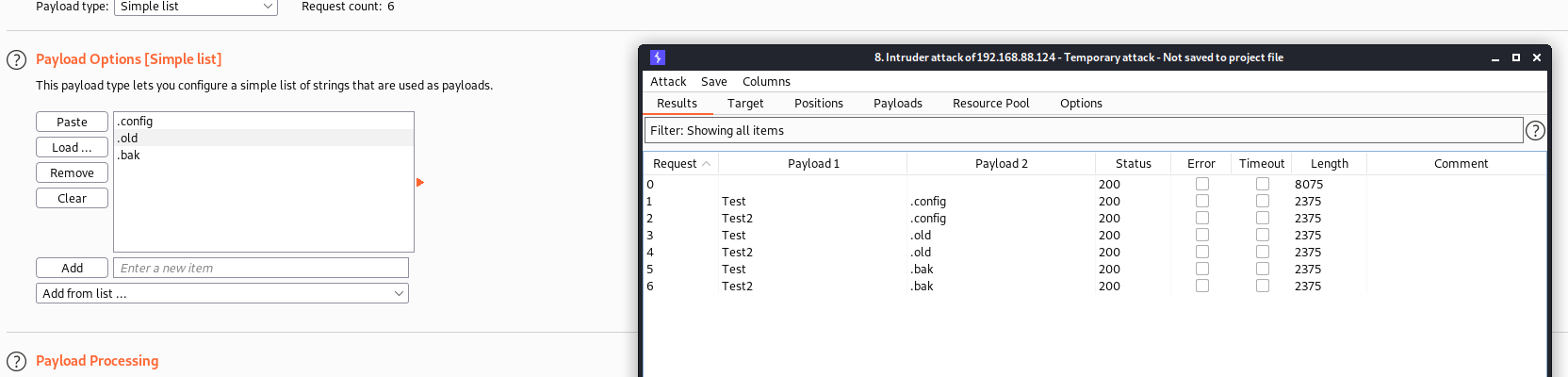

First, send a request for the target root directory / to Intruder and clear all payload positions. Then add a new payload position as shown below:

GET /§§ HTTP/1.1

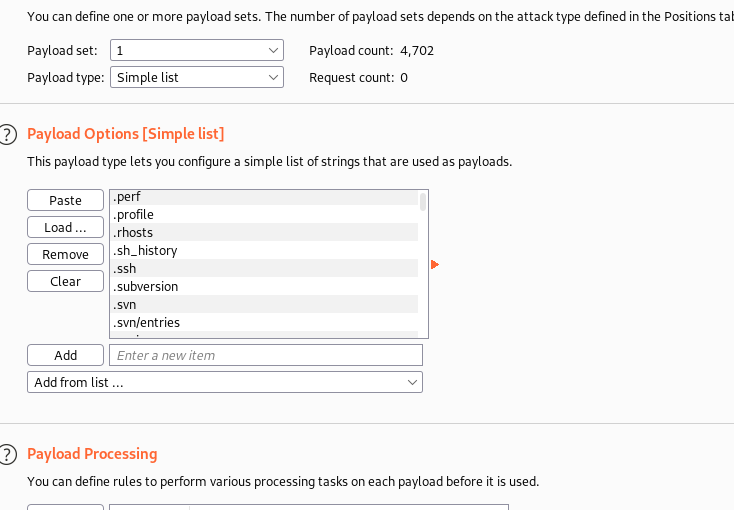

Files Brute Forcing

GET /§name§.§extension§ HTTP/1.1

Select attack type Cluster bomb

Go to Payloads Tab, Set payload set to 1 and load the common directory by click on Load button in the Payload Options section.

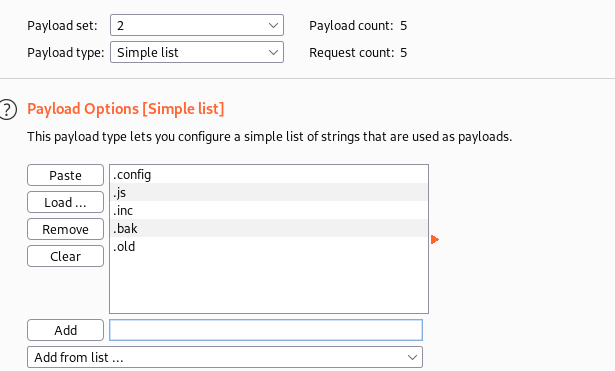

Next set the payload set to 2 and provide file extension:

Click on Start Attack
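A Cluster bomb attack tries every combination of the payload sets, so the number of requests is the size of set 1 multiplied by the size of set 2. The sketch below shows the same idea with `itertools.product`; the function name and the tiny word lists are assumptions for illustration, and the order in which Burp sends the requests may differ.

```python
from itertools import product


def cluster_bomb(names, extensions):
    """Yield one request line per (name, extension) combination."""
    for name, ext in product(names, extensions):
        yield f"GET /{name}.{ext} HTTP/1.1"


if __name__ == "__main__":
    # Two names and two extensions give 2 x 2 = 4 requests
    for request_line in cluster_bomb(["admin", "backup"], ["php", "txt"]):
        print(request_line)
```

With a large name list, keeping the extension list short keeps the total request count manageable.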

GoBuster

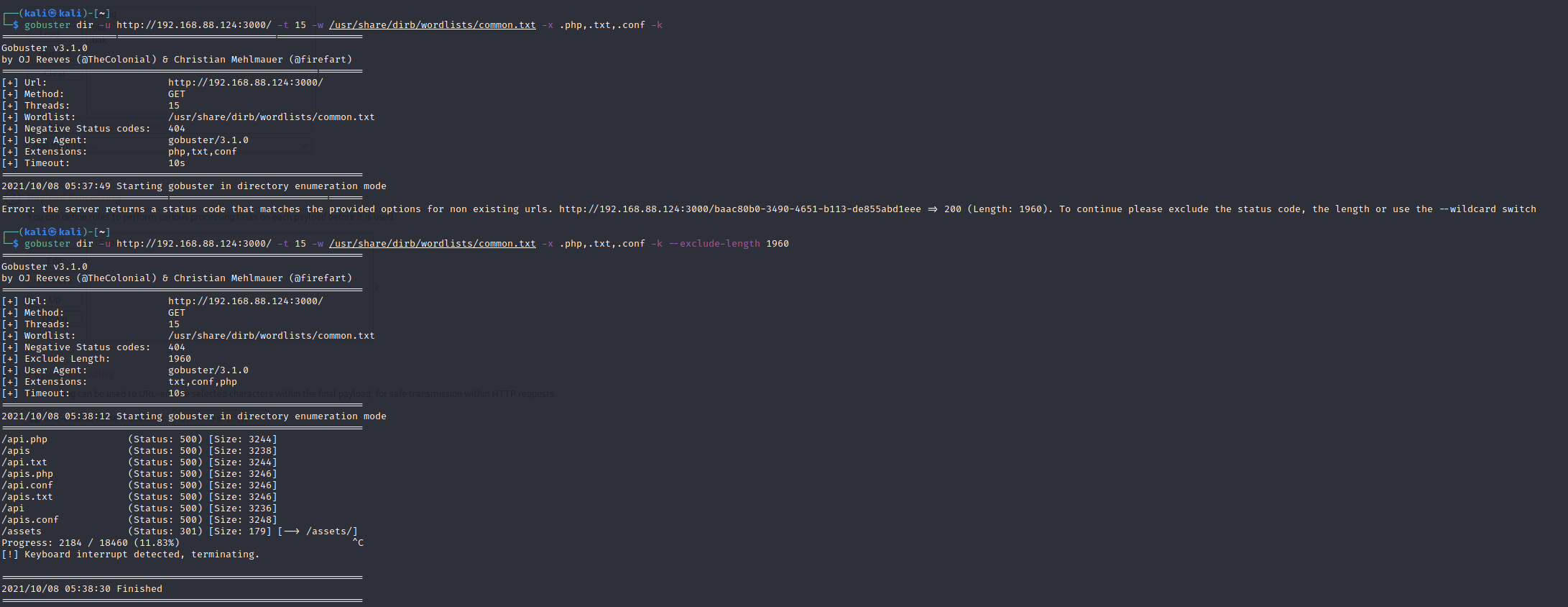

Another free tool I use to find hidden files and folders is gobuster:

gobuster dir -u https://host/ -t 15 -w /usr/share/dirb/wordlists/common.txt -x .php,.txt,.conf -k

If we get an error like this:

Error: the server returns a status code that matches the provided options for non existing urls. http://192.168.88.124:3000/baac80b0-3490-4651-b113-de855abd1eee => 200 (Length: 1960). To continue please exclude the status code, the length or use the --wildcard switch

Retry with --exclude-length, using the length reported in the error:

gobuster dir -u http://192.168.88.124:3000/ -t 15 -w /usr/share/dirb/wordlists/common.txt -x .php,.txt,.conf -k --exclude-length 1960
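The idea behind excluding a length is that the server answers every unknown URL with the same page, so any response of exactly that size is treated as "not found". A minimal sketch of that filtering follows; the function name and the result records are made up for illustration and are not gobuster's actual output format.

```python
def filter_wildcard(results, exclude_length):
    """Drop results whose body length matches the wildcard response length."""
    return [r for r in results if r["length"] != exclude_length]


# Hypothetical scan results for illustration only
RESULTS = [
    {"path": "/admin", "status": 200, "length": 1960},
    {"path": "/login", "status": 200, "length": 3412},
    {"path": "/backup", "status": 200, "length": 1960},
]

if __name__ == "__main__":
    for hit in filter_wildcard(RESULTS, 1960):
        print(hit["path"], hit["status"], hit["length"])
```

One caveat of this approach is that a real page that happens to have the excluded length is dropped as well.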

Now what?

- Use this information to find authentication, misconfiguration, business logic, or injection vulnerabilities.

- Make an effective password attack plan.

- Plan a good social engineering attack.

Without information gathering and enumeration, an effective plan is never possible!